Brief intro on Ethereum scaling & zk-proofing

Ethereum is the largest, and in many respects, the most credible smart contract blockchain. Ethereum has the most decentralized consensus in the smart contract blockchain space, backed by “Ethereum values” such as credible neutrality — an open garden for anyone to build permissionlessly. It's not surprising this environment attracts the most talent and projects.

The demand for Ethereum has increased, making the network expensive to use. Ethereum L1 can only handle around 15 transactions per second. Thus, scaling Ethereum has become especially critical and has drawn a lot of talent and teams.

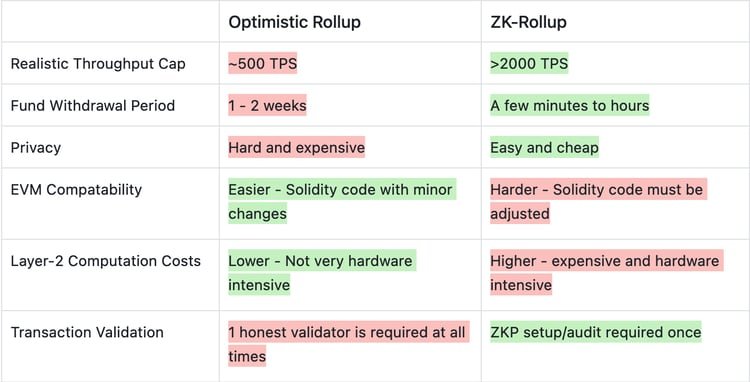

As we know, Ethereum follows a rollup-centric scaling roadmap. This involves L2 rollups that improve throughput and reduce the burden on Ethereum L1. There are two main types of rollups: optimistic rollups and zk-rollups.

Optimistic rollups assume that transactions are valid unless someone challenges them, with fraud proofs (still to be fully implemented in many rollups) as the safeguard. They allow transactions to be processed off-chain (off the mainnet) and then submitted to Ethereum L1 in a compressed format, reducing gas costs and increasing throughput.

In contrast, zk-rollups, short for Zero-Knowledge Rollups, provide a trustless and more secure way of scaling Ethereum. ZK-Rollups use cryptography and rely on math to prove the validity of transactions. ZK-rollups batch a bunch of transactions together, calculate a ZK proof of these program execution traces, and finally submit the proof to Ethereum L1 mainnet. The key thing is that verifying this proof on L1 mainnet is significantly faster than re-executing the transactions, thus providing scaling benefits.

Thus, it’s not a surprise that some Optimistic rollups are taking steps to adopt zk-proofs. Besides this, certain entirely different Layer-1 (L1) blockchains are also considering converting themselves from independent L1 to an L2 rollup under Ethereum.

However, while the proofing systems for ZK-Rollups have developed fast, there's also a shared understanding that some of the proof systems won't be bug-free for years. Thus, certain rollups are exploring multiple parallel proving systems as a secure path ahead.

One of the most interesting paradigm shifts has been ZK-VMs, which can essentially create a ZK proof for any computer program, even for the entire EVM (Ethereum Virtual Machine).

ZK-VMs have been around for a while. TinyRAM in 2013 was the first research-driven project. Later, around 2021, in the blockchain space, Cairo (Starknet), Zinc (zkSync), and Polygon ZK-EVM (or Miden) were the next major projects. 2021 was also the year for the first commercial projects.

Developments this year, in 2023, have enabled the creation of ZK proofs for an entire microprocessor architecture (RISC-V). This, in turn, has made it possible to run the entire EVM inside it and generate proofs for everything running on the EVM. This is a major paradigm shift.

Overview of ZK-VMs. Source: “Analysis of zkVM Designs” (ZK10), 10th Sep 2023, by Wei Dai & Terry Chung.

Focus on ZK-rollups, Multi-proving and ZK-VMs

In this article, we explore both of these topics: Multi-proving in the context of Ethereum L2 rollups, ZK-VMs, and how they relate to each other. ZK-VMs and general-purpose verifiable computation are new paradigms we'll explore.

We'll also examine the most important projects and companies in the ZK-VM space: Risc Zero, Powdr, Nexus, and Lasso/Jolt.

Background info

This article is written for people curious to understand the current state of Ethereum L2 zk-rollup development and what is ahead in terms of proving systems, focusing on the ZK-VM approach.

I assume a basic understanding of Ethereum, L2, zk-Rollups, and zero-knowledge proofs.

If you are new to zero-knowledge proofs and zk-rollups, it’s better to first read my primer article on the topic here:

With these opening words, let’s go!

Table of Contents

Problem: 34000+ lines of code in PSE circuits won’t be bug free for years

Rollups are on training wheels

Solution: Vitalik’s Multi-Proving idea

Option 1: High-threshold governance override

Option 2: Multi-prover

Option 3: Two-prover plus governance tie break (combination of 1 and 2)

Recap of proving systems:

SGX

Polygon zkEVM

Kakarot

ZK-VM

Other

Contestable Rollup?

ZK-VM brings a new paradigm in ZK and scaling

What is ZK-VM?

What is RISC-V?

How does ZK-VM actually work?

Regular Virtual Machine

Zero Knowledge Virtual Machine (ZK-VM)

What are the most interesting companies in the field?

Risc Zero

Powdr

Nexus

Jolt and Lasso

Others

Performance comparison between ZK-VMs

Summary and conclusion

References

Problem: 34000+ lines of code in PSE circuits won’t be bug free for years

Screenshot of Vitalik’s presentation about Multi-Proving on 10th Oct 2022 in Rollup Day in Bogota.

The Ethereum Foundation’s PSE team (Privacy & Scaling Explorations team) has been developing an open-source system based on manually written circuits using the Halo2 proving system. PSE circuits are used by, e.g., Taiko and Scroll, which are both L2 zk-rollups. PSE circuits are also used by certain ZK co-processors. And naturally, the PSE team is exploring ZK tech to eventually scale Ethereum L1 as well, albeit with a conservative and long timeframe.

PSE circuits contain over 34,000 lines of code (at least that was the number one year ago). These circuits are not going to be bug-free for years, as acknowledged by Vitalik and the engineers at large.

Vitalik addressed this in his presentation “Multi-Provers for Rollup Security” on 10th Oct 2022 at Rollup Day 2022. See the video embedded below.

It is complicated and hard to manually write these circuits for something as complex as the EVM (which was never designed for ZK proving). The code base is hard to maintain, hard to modify, and not easy for humans to read.

Vitalik’s presentation about Multi-Proving in Rollup Day in Bogota, 10th Oct 2022

Rollups are on training wheels

This means that nearly all rollups are on "training wheels" before becoming fully decentralized. That is, either in development or overridable by governance.

L2BEAT does great work monitoring the development (Stage 0, 1, 2).

The PSE circuits are being developed further and will become more trustworthy over time. To bridge the long time frame this takes, new methods have been proposed to guarantee the security of zk-rollups. Let's deep-dive into Multi-Proving and the Contestable Rollup design.

Solution: Vitalik’s Multi-Proving idea

Besides PSE circuits, we can use parallel proving methods.

Vitalik mentioned in his speech:

1. High-threshold governance override

2. Multi-proving

3. Two-prover plus governance tie break (combination of 1 and 2)

Since Vitalik's speech one year ago, there have been interesting developments. I'll leave you to linger with a few terms: Contestable Rollup, SGX, and ZK-VMs.

Before we explore these, let's first explore Vitalik's 3 main ideas and then expand on the development since his presentation.

Option 1: High-threshold governance override

E.g., 6 of 8 or 12 of 15 guardian multi-sig. If a bug is found, guardians can override it (override the state root).

That said, governance always has a weakness: guardian selection, legal risks/responsibilities, etc.

Option 2: Multi-prover

Like Ethereum, which has multiple client implementations for extra safety, zk-Rollups could have multiple proving systems.

If one implementation has a bug, another implementation may not have one, at least in the same place. This is especially true if the implementations are created by different teams and have different technical architectures.

This is a relevant analogy, because Ethereum’s multi-client approach has saved it from attack attempts many times. Bugs have been found in many clients (both Geth and Parity), even at the same time, but in different places.

Vitalik suggested combining optimistic fraud proofs and zk-proofs, and there are many variations.

As one option, Vitalik envisioned something that didn't exist back then: Compiling the Geth source code and putting it through some minimal VM, such as MIPS.

Vitalik’s words have proven to possess strong predictive force in the industry. This one-liner turned out to be no exception, as we’ll see later in the blog post ✌️

Option 3: Two-prover plus governance tie break

One big question is the multi-prover aggregator's code.

You want to minimize the number of lines of code that definitely need to be bug-free. Instead of 30,000+ lines, it could be 100-200 lines of code.

Then, formally prove it. And coordinate between various projects using this same formally proved code.

One challenge is keeping the aggregator itself minimal. Vitalik's idea was to use a plain old 2-of-3 Gnosis Safe wallet.

The 3 wallets would be:

1st: 4-of-7 guardian multi-sig (another Gnosis)

2nd: account that confirms ZK-proofs

3rd: account that confirms fraud proofs

So, you could even utilize existing code that does the aggregation of proofs and thus reduce the surface area of code you have to trust unconditionally.
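The 2-of-3 arrangement above can be sketched in a few lines of Python. This is only an illustration of the voting logic, not Gnosis Safe code and not anything shown in Vitalik's talk: the `Votes` class, its field names, and `accept_state_root` are hypothetical names I made up for the example.

```python
from dataclasses import dataclass

GUARDIAN_THRESHOLD = 4  # inner wallet: 4-of-7 guardian multi-sig
OUTER_THRESHOLD = 2     # outer wallet: 2-of-3


@dataclass(frozen=True)
class Votes:
    """Approvals collected for one proposed state root (hypothetical structure)."""
    guardian_approvals: int  # how many of the 7 guardians signed
    zk_proof_ok: bool        # the account that confirms ZK proofs signed
    fraud_proof_ok: bool     # the account that confirms fraud proofs signed


def accept_state_root(votes: Votes) -> bool:
    """Return True when at least 2 of the 3 outer signers approve."""
    signers = [
        votes.guardian_approvals >= GUARDIAN_THRESHOLD,
        votes.zk_proof_ok,
        votes.fraud_proof_ok,
    ]
    return sum(signers) >= OUTER_THRESHOLD
```

When the ZK account and the fraud-proof account agree, the guardians are not needed at all. When the two disagree, the guardian multi-sig acts as the tie break.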

This marks the conclusion of Vitalik's presentation summary.

The rest of the thread explores developments that have happened in the last 12 months since Vitalik's speech.

Recap of proving systems

Besides PSE’s ZK proofs, optimistic fraud proofs, and governance mechanisms, there are also other proving systems that are utilized or explored by L2 teams.

SGX

One of them is SGX (Software Guard Extensions). SGX is a Trusted Execution Environment (TEE) developed by Intel. SGX can feel like a cheat code because it runs at nearly the same speed as the computation itself. However, it relies on trust in Intel and thus is not sufficient alone. SGX can work as part of a multi-proving system as an additional security mechanism.

Vitalik recently commented on SGX on Twitter spaces. He said essentially the same thing. SGX is good if it adds security, and bad if it adds dependency for security. E.g. running a rollup that would only utilize SGX would be a terrible idea.

If you’d like to learn more about SGX, you can check this comprehensive article.

Polygon ZK-EVM

Polygon zkEVM is an L2 rollup on Ethereum that uses its own proving system, based on a combination of SNARKs and STARKs. To be clear, Polygon doesn’t use PSE circuits.

Kakarot

Kakarot is a slightly different kind of project. It is a zkEVM implementation written in Cairo. It is designed to make the Solidity language available on the Starknet L2 rollup, which traditionally supported only the Cairo language for smart contracts.

ZK-VM

Finally, we have the ZK-VM (Zero-Knowledge Virtual Machine) approach. In this case, we specifically refer to a ZK-VM that supports an entire instruction set architecture, like RISC-V. This is a truly new paradigm, something Vitalik was envisioning.

ZK-VM enables building ZK apps without having to build custom program-specific circuits.

Bobbin Threadbare summarized the benefits of ZK-VMs in four parts:

ZK-VMs are easy to use (no need to learn cryptography and ZKP systems)

They are universal (a Turing-complete ZK-VM can prove arbitrary computations).

They are simple. A (relatively) simple set of constraints can describe the entire VM.

Ability to utilize recursion. (Proof verification is just another program executed on the VM)

ZK-VM is a key focus area of this article. In the rest of the article, we’ll have a brief look at how a ZK-VM works and at the most interesting companies and projects in the space.

Contestable Rollup

Daniel Wang’s presentation about Taiko L2 protocol, multi-proving and contestable rollup approach.

Now that we understand the multi-proving paradigm and have had a glimpse of different proving systems, let’s have a look at the “contestable rollup” design.

Multi-proving works best when combined with contestable rollup design.

So, what is a contestable rollup? Not all blocks need a ZK proof. It might sound dramatic, but it's an extension of Vitalik Buterin’s original multi-prover vision we summarized above.

Anyone can attest to the validity of a transaction. Anyone can request a ZK and/or SGX proof.

This way, computational resources are initially saved from tiny/minuscule transactions, but proofs can always be requested if needed. As the name says, it's a contestable approach.
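The "accept first, prove on request" idea can be sketched as a small state machine. This is a toy model under my own assumptions, not Taiko's actual protocol: the `Status` values, `Block`, `contest`, and `resolve` are hypothetical names, and the sketch leaves out the economic incentives and time limits a real protocol would need.

```python
from dataclasses import dataclass
from enum import Enum, auto


class Status(Enum):
    PROPOSED = auto()   # accepted provisionally, no ZK/SGX proof yet
    CONTESTED = auto()  # someone has requested a proof
    PROVEN = auto()     # the requested proof confirmed the block
    REJECTED = auto()   # the requested proof contradicted the block


@dataclass
class Block:
    state_root: str
    status: Status = Status.PROPOSED


def contest(block: Block) -> None:
    """Anyone may request a proof for a block that is still only proposed."""
    if block.status is Status.PROPOSED:
        block.status = Status.CONTESTED


def resolve(block: Block, proven_state_root: str) -> None:
    """Settle a contested block by comparing it with the proven state root."""
    if block.status is not Status.CONTESTED:
        return  # nothing was contested, so no proof is needed
    if proven_state_root == block.state_root:
        block.status = Status.PROVEN
    else:
        block.status = Status.REJECTED
```

The point of the design is visible in `resolve`: an uncontested block never triggers proof generation, so proving cost is only paid when someone actually asks for it.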

“Contestable rollup” term was coined by Daniel Wang (CEO & Co-founder at Taiko).

If you’d like to understand multi-prover and contestable rollup concepts in more detail, you can see Daniel’s video above how it all works in Taiko L2 zk-Rollup. Multi-prover part starts at 13:54.

Dispute game factory

“Dispute Game Factory” on Optimism is essentially a similar concept to the contestable rollup. Protolambda from Optimism discussed this approach in a recent Twitter Spaces.

This allows adding more “games” or “sub-games” to the system. “Games” essentially refer to various kinds of proofs, ideally both optimistic fraud proofs and zk-proofs, and various versions of these.

In the same Twitter Spaces, Vitalik also agreed that the contestable rollup or dispute game approach is good. Vitalik stressed that the fallback mechanism of a rollup has to be robust by itself. The mechanism needs to be so solid that people would feel comfortable even if it was the only mechanism.

This concludes the first part of the blog post.

The next half of the blog post goes deeper into ZK-VMs, especially the ones that utilize the RISC-V instruction set architecture. We explore why they are a new paradigm, how they work, and which are some of the most interesting companies and projects in the space.

ZK-VM brings a new paradigm in ZK and scaling

What is ZK-VM?

Generally speaking, ZK-VM is a virtual machine that can guarantee secure and verifiable computation utilizing zero-knowledge proofs.

Technically speaking, ZK-VM is a Virtual Machine (VM) implemented as an arithmetic circuit for the zero-knowledge proof system. Instead of proving the execution of a specific program (e.g., EVM, as PSE circuits aim to do), you prove the execution of an entire Virtual Machine.

As an example, currently, e.g., Taiko and Scroll L2 zk-rollups (together with the Ethereum Foundation’s PSE team) have developed circuits directly for the EVM, on top of which the smart contracts run.

With ZK-VM, instead of creating circuits specifically for the EVM program, you prove the execution of the entire microprocessor architecture, such as RISC-V.

The benefit here is that RISC-V is a widely used architecture and widely supported by compilers. You can compile programs from a number of typical programming languages like Rust, Python, C++, and so on. Sometimes these are called “frontend languages” in the ZK-VM context.

So, what is RISC-V?

RISC-V is a royalty-free, open-standard instruction set architecture built on RISC (Reduced Instruction Set Computer) principles. The RISC-V project started in 2010 at UC Berkeley. As opposed to the hundreds of instructions in CISC (Complex Instruction Set Computer) architectures, the RISC-V base integer instruction set has only around 40 instructions. This makes it feasible to create ZK circuits and proofs for the entire RISC-V architecture. The open nature of RISC-V also aligns well with the values of Ethereum and decentralized blockchain systems.

The beauty here is that after you’ve generated ZK circuits for RISC-V, you can generate ZK proofs for any computer program. Wow. You can literally put the entire EVM there as it is and get a fully ZK-proofed EVM. Double wow.

Is this real? Yes, it is. However, with a caveat.

Let’s explore the difference between manually writing circuits and the ZK-VM approach. The following slide gives a good overview of the current state:

Comparing pros and cons of manually writing program-specific circuits (“Zk Circuits”, on left) and ZK-VMs (on right). Source: Wei Dai and Terry Chung.

To summarize the slide above, ZK-VMs are better in almost all aspects compared to manual circuit writing. With ZK-VMs, it's easier to modify and expand the proofing system code base, conduct auditing, and utilize a wide range of available tooling.

However, the caveat is that ZK-VMs are currently 10-100x slower.

Thus, the big question is: Can this performance be improved? The consensus in the industry is — yes — we're just taking baby steps. The performance will improve in a reasonable time frame.

The key way to improve speed is parallelization, splitting the ZK proof generation into hundreds or thousands of processors. Some companies call this splitting technology "continuations." We'll explore this later.

How does ZK-VM actually work?

Before explaining ZK-VM, let’s take a look at how a simple VM (Virtual Machine), or a state machine, works.

I’ll utilize three slides from Bobbin Threadbare’s presentations.

Regular Virtual Machine

Source: Miden VM architecture overview by Bobbin Threadbare at 2022 Science of Blockchain Conference - Applied ZK Day on September 14, 2022.

The components explained:

Inputs:

Initial State is the state of a program, e.g., the state of the Ethereum blockchain before the transactions are executed.

Program is a computer program.

Outputs:

Final state is the state after program execution. E.g. state of the Ethereum blockchain after the transactions are executed.
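The regular VM above is just a function from (Initial State, Program) to Final State. A minimal sketch in Python, assuming a made-up three-instruction machine (`SET`, `ADD`, `MUL`) that has nothing to do with the EVM or Miden instruction sets:

```python
from typing import Dict, List, Tuple

State = Dict[str, int]
Instruction = Tuple[str, str, int]  # (opcode, register, operand)


def run(initial_state: State, program: List[Instruction]) -> State:
    """Execute the program step by step and return the final state."""
    state = dict(initial_state)  # copy, so the initial state stays untouched
    for opcode, register, operand in program:
        if opcode == "SET":
            state[register] = operand
        elif opcode == "ADD":
            state[register] = state.get(register, 0) + operand
        elif opcode == "MUL":
            state[register] = state.get(register, 0) * operand
        else:
            raise ValueError(f"unknown opcode: {opcode}")
    return state


program = [("ADD", "balance", 5), ("MUL", "balance", 2)]
final_state = run({"balance": 10}, program)
print(final_state)  # {'balance': 30}
```

Anyone who wants to check `final_state` here has to call `run` again themselves. That re-execution is exactly the cost a ZK-VM removes.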

Now, let’s have a look at what a ZK-VM looks like:

Source: ZK7: Miden VM: a STARK-friendly VM for blockchains by Bobbin Threadbare at Zero Knowledge Summit (ZK7) on April 21, 2022.

The ZK-VM has two extra components: Proof and Witness.

Outputs:

Proof can be used by anyone to verify that the programs were executed correctly. It means we can get from the Initial State to the Final State without having to re-execute any of the programs. This saves a lot of computational resources.

Inputs:

Witness, aka “secret inputs.” In the context of a blockchain transaction, it could be transaction signatures that go into the witness.

The verifier who wants to verify the correct execution of the program does not need to know this Witness.

Because the ZK-VM has access to the Witness, it is also possible to transform the other inputs (Initial State, Final State, and Programs). We can simply provide a commitment to the Initial State, Final State, and Programs.

(A commitment is a cryptographic technique for publicly committing to a specific value without revealing the value itself.)

All three go into the Witness, and the verifier can then verify the correct execution of the program.

The verifier only needs to know the commitments to the Initial State, Final State, and Programs.

But importantly, no re-execution needs to be done, and verifier doesn’t have access to what actually happened inside the execution, or to any other specific details.
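To make the commitment idea concrete, here is a minimal sketch using a salted SHA-256 hash from Python's standard library. The function names `commit` and `opens_to` are my own, and the scheme is only an illustration: production ZK-VMs typically commit to state with structures such as Merkle roots, and the ZK proof replaces the explicit "opening" step shown here.

```python
import hashlib
import hmac
import secrets


def commit(value: bytes, salt: bytes) -> str:
    """Commit to a value by hashing it together with a random salt."""
    return hashlib.sha256(salt + value).hexdigest()


def opens_to(commitment: str, value: bytes, salt: bytes) -> bool:
    """Check that (value, salt) is a valid opening of the commitment."""
    return hmac.compare_digest(commitment, commit(value, salt))


salt = secrets.token_bytes(32)             # kept private by the committer
initial_state = b"balance=10"              # hypothetical state encoding
public_commitment = commit(initial_state, salt)

# The commitment can be published without revealing the state itself.
print(len(public_commitment))              # 64 hex characters
```

The commitment binds the prover to one specific state, while the salt keeps an observer from guessing the state by hashing likely candidates.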

In our example of scaling Ethereum, the “Program” input is the entire EVM.

Because we only use the commitments and not the actual values/data, the diagram looks as follows:

Source: ZK7: Miden VM: a STARK-friendly VM for blockchains by Bobbin Threadbare at Zero Knowledge Summit (ZK7) on April 21, 2022.

If you have a bit extra time, I recommend watching the first 4 minutes of Bobbin’s presentation. He elegantly explains these three slides above. If you have more time, the entire 30 minute presentation is a great primer on ZK-VMs.

Obviously, this was just a scratch of the surface of how the tech works. The detailed mechanics of ZK-VM are out of the scope of this blog post.

Next, let’s explore the companies and projects working on general-purpose ZK-VMs that are utilizing RISC-V.

What are the most interesting companies in the field?

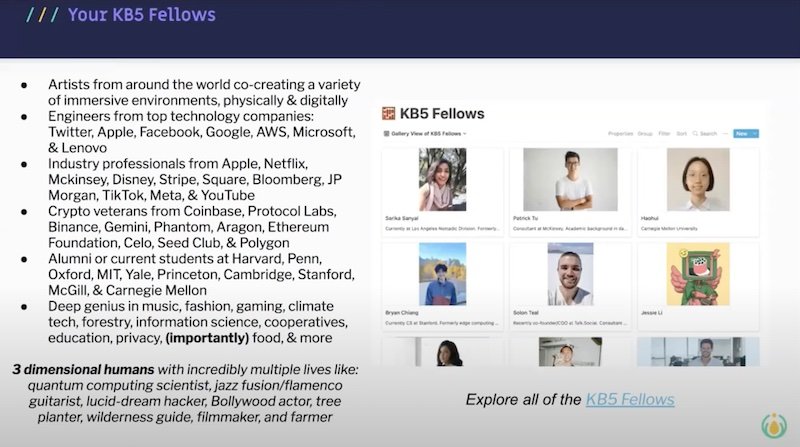

We’ll cover the following companies and projects: Risc Zero, Nexus, Powdr and Jolt/Lasso. I’ve embedded YouTube presentations from the founders.

Risc Zero

Skip to the 12:25 mark in the video for the EVM proving part.

Risc Zero is a team of over 50 people, originating from San Francisco. They’ve been around since 2021 and have raised 40 MUSD in Series A funding, in addition to 14 MUSD from previous rounds.

Risc Zero has developed a general purpose ZK-VM.

In August 2023, they gained plenty of publicity by proving an EVM block utilizing their RISC-V-based ZK-VM. This was conducted with their product named Zeth (see announcement). Zeth is an open-source ZK block prover for Ethereum built on Risc Zero’s ZK-VM. Zeth is based on Reth.

In a one-sentence summary, Risc Zero’s Zeth client can create ZK proofs for an EVM block. This was developed in just under 4 weeks by 2 engineers.

Of course, first, there was a significant effort to create the proving system for the RISC-V architecture. Once that was done, it became fairly easy to compile a program for that architecture and get a ZK proof of its execution. Nevertheless, it’s quite a contrast to PSE circuit development, which has taken years and is still to be completed and audited.

The caveat is, as mentioned earlier, creating ZK proofs for EVM blocks utilizing Risc Zero is still slow.

Risc Zero’s offering consists of different products, services and concepts. Let’s have a look:

Zeth is an open-source ZK block prover for Ethereum built on Risc Zero’s ZK-VM.

Bonsai is proofing as a service (essentially a SaaS service). Bonsai is a ZK co-processor for Ethereum. Bonsai allows you to request verified proofs via an off-chain REST API interface or on-chain directly from smart contracts.

Continuations is a feature for computing ZK proofs in parallel across multiple machines/GPUs. This can significantly scale computation; however, it comes with an overhead cost, as high as 50%. I’d imagine improving parallelization algorithms will be Risc Zero’s key area of development.

Without Continuations, proving big computations (such as EVM blocks) wouldn’t be feasible. Continuations led to the success of the Zeth project. For example, zkEVM proof is about 4 billion cycles. Continuations are used to split it into 4000 one-million-cycle segments, proving them in parallel, and finally combining them using recursive proof.
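The arithmetic behind that split is easy to check. The sketch below reproduces the segment count from the numbers in the text and, as an additional assumption of mine, estimates how many rounds it would take if segment proofs were merged two at a time. The source only says the proofs are combined recursively, so the pairwise tree is illustrative, and the function names are hypothetical.

```python
def segment_count(total_cycles: int, segment_cycles: int) -> int:
    """Number of segments needed to cover the whole execution trace."""
    return -(-total_cycles // segment_cycles)  # ceiling division


def pairwise_merge_rounds(segments: int) -> int:
    """Rounds needed if proofs are merged two at a time (an assumption)."""
    return (segments - 1).bit_length()


segments = segment_count(4_000_000_000, 1_000_000)
print(segments)                         # 4000
print(pairwise_merge_rounds(segments))  # 12
```

The segments are independent of each other, which is why they can be proven in parallel. Only the merge step has a sequential depth, and under the pairwise assumption it grows logarithmically with the number of segments.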

As one benchmark metric in their launch blog post, the cost of proving an Ethereum block came to around 21 USD and 50 minutes. This computation was done with 64 proving nodes using “continuations.” These numbers are expected to significantly decrease over time.

Finally, it’s interesting to see where Risc Zero will exactly develop in the future. The team has developed exciting technology. It is mostly (but not entirely) open source.

Besides Risc Zero, there are also other competent teams with a similar vision, which I’ll explore next.

Nexus

Nexus aims to create a general-purpose verifiable computing platform. Nexus also originates from the San Francisco area, specifically from Stanford University, and has raised funding from Dragonfly, Alliance and SV Angel.

Nexus has given one presentation at BASS (Blockchain Application Stanford Summit) on October 1, 2023 and has a simple landing website. Other than that, Nexus seems to be still in stealth mode.

Nexus ZK-VM

Nexus claims that Nexus ZK-VM can prove any programming language, any machine architecture, any arithmetization, any size (potentially infinite), and finally, use recursive proofs to aggregate and parallelize. They call this Nexus Network.

Founder Daniel Marin underlines in the presentation the importance of proving arbitrarily sized computations, including extremely large computations that have never been proven before at this scale.

Nexus focuses on RISC-V architecture, similar to Risc Zero.

Nexus Network

Nexus Network is a decentralized market for verifiable computation. It enables decentralized computation of zero-knowledge proofs to enable verification of any computer program.

Technically, it is a system that enables the creation of multiple proofs (with multiple inputs) and aggregates these proofs together using recursion in a way that a single small proof can be succinctly and efficiently verified, attesting to the correct execution of multiple functions with multiple different inputs.

Every stateful system (e.g., rollups, smart contracts) can outsource proof generation to the stateless Nexus network.

Early performance stats (demo):

In the video above, they provided a demo of calculating the first 1000 Fibonacci numbers and generating a proof using Nexus ZK-VM.

Computing Fib(1000) on EVM amounts to around 2,000,000 Gas, which is around 160 USD on the EVM, with the recent ETH token prices.

If you run Fib(1000) on Nexus and verify its execution on Ethereum, it amounts to around 20 USD. This is a fixed cost for all N in Fib(N). Obviously, the proof generation takes time and has an additional cost, but this is outside of the EVM.
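A quick back-of-the-envelope check of these numbers is shown below. The gas, USD, and verification figures come from the demo as quoted above. The linear scaling of on-chain cost with N is my own simplifying assumption, used only to show where a fixed verification cost starts to win.

```python
ONCHAIN_GAS_FIB_1000 = 2_000_000  # gas quoted above for Fib(1000) on the EVM
ONCHAIN_USD_FIB_1000 = 160.0      # USD quoted above for that gas
VERIFY_USD = 20.0                 # fixed on-chain verification cost quoted above

# Price per unit of gas implied by the two quoted figures.
usd_per_gas = ONCHAIN_USD_FIB_1000 / ONCHAIN_GAS_FIB_1000


def onchain_cost_usd(n: int) -> float:
    """Assumption: the on-chain cost of Fib(n) grows linearly with n."""
    return ONCHAIN_USD_FIB_1000 * n / 1000


def cheaper_to_verify(n: int) -> bool:
    """True when verifying a proof costs less than computing on-chain."""
    return VERIFY_USD < onchain_cost_usd(n)


print(onchain_cost_usd(1000) / VERIFY_USD)  # 8.0, i.e. 8x cheaper at N = 1000
print(cheaper_to_verify(100))               # False
print(cheaper_to_verify(126))               # True
```

Under the linear assumption the break-even point is N = 125. Beyond that, the gap keeps widening because the verification cost stays flat, although the off-chain proving cost, which this sketch ignores, still has to be paid by someone.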

Finally:

While most of the details of Nexus are still to be published later, we can see from the website that Nexus has attracted some serious talent. For example, Jens Groth is their Chief Scientist, known for the Groth16 proving system and being the inventor of pairing-based zkSNARKs. Considering this, I can sense there might be some cryptography innovations cooking.

Nexus seems to have big ambitions and talent to support that. Nexus is also aiming for the Incrementally Verified Computation (IVC) space at large, providing use-cases also outside of the blockchain space.

PS. Nexus's presentation listed key cryptography/proof system research papers like IVC, Nova, SuperNova, CCS, HyperNova, and Protostar. I compiled them into this PDF for my convenience, with the first 7 pages containing abstracts in chronological order. It's a handy way to study the timeline of these innovations, and recognize how few recurring authors are behind them.

Powdr

Powdr: a modular stack for zkVMs, by Christian Reitwiessner at Zero Knowledge Summit 10 on Sept 20th 2023 in London.

Powdr is a remote team of around 10 people, originating from Berlin, funded by grants from the Ethereum Foundation.

Let’s clarify what Powdr is not: Powdr is not a ZK-VM.

Instead, with Powdr, you can build ZK-VMs.

Powdr creates a toolkit and a modular stack to build ZK-VMs.

The ZK part in the ZK-VM is not the most important aspect for Powdr. Powdr is more focused on the verifiable computation aspect.

Powdr aims to develop ZK-VMs that generate proofs of program execution that are faster to verify than rerunning the execution in the first place.

Recently, numerous ZK-VMs have been created (Taiko, Scroll, Polygon zkEVM, Risc Zero, LLVM, nil zkLLVM, Valida, fluentlabs, Polygon Miden & Zero, zkSync, Cairo). Usually, they are custom-made. See the slide below.

Source: Powdr: a modular stack for zkVMs, by Christian Reitwiessner at ZK10 on Sept 20th 2023 in London.

According to Powdr, there is one advantage in custom-built ZK-VMs, which is “probably” performance.

However, there are disadvantages in custom-built ZK-VMs:

Hard to audit

Hard to change

Requires lots of effort to build and maintain

Prover (and proof-system) specific

Difficult to reuse

In a way, Powdr aims to be the LLVM (Low-Level Virtual Machine) for ZK-VMs.

Powdr wants to develop a system that allows you to freely change the frontend programming language and the backend proving system. A system that enables you to write ZK-VMs in high-level languages, like Rust.

See the diagram below.

On the left, you can see front-end programming languages (JS, C++, Rust, Solidity). On the right are the backend proving systems (Halo2, eSTARK, SuperNova). Powdr is the toolkit in between and consists of the powdr-ASM and powdr-PIL languages.

Source: Powdr: a modular stack for zkVMs, by Christian Reitwiessner at ZK10 on Sept 20th 2023 in London.

Powdr is a compiler stack that enables you to define a ZK-VM. In the Powdr source code, written in the Powdr PIL language, you specify the architecture and instructions for your Virtual Machine.

It allows you to abstract away all the low-level constraints and prover complexity, allowing you to build your machines in a modular way.

Powdr incorporates various layers of abstraction that facilitate optimizations and analysis. Automated analysis, such as optimization checks for non-determinism, and formal verification to ensure the implementation correctness, can be performed on the Powdr source code.

Btw, PIL language stands for Polynomial Identity Language, and originates from Polygon zkEVM, with Powdr’s own modifications and development.

Powdr’s goal is to make it easy to build, test, and audit Zero Knowledge Virtual Machines.

It appears that Powdr also has heavyweight co-founders. Christian Reitwiessner, a co-inventor of the Solidity language, is among them. Powdr is entirely open-source and does not have any investors, unlike Risc Zero and Nexus.

JOLT and Lasso

Video above: 3 min primer on Lasso. Source: Lasso in a Nutshell, by Justin Thaler at an a16z crypto event.

Also see, 3 min primer of Jolt: The Lookup Singularity with Justin Thaler.

Jolt and Lasso are a bit different from the previous products and companies.

Jolt and Lasso are more like research projects at the moment. These two products are created by the same team and closely relate to each other.

Lasso is a new, more performant “lookup argument”. Lasso is utilized by Jolt, which is a new approach to building ZK-VMs. Let’s examine them more closely.

LASSO stands for Lookup Arguments via Sum-check and Sparse polynomial commitments, including for Oversized tables.

Hah! Cryptographers never disappoint me with their creative naming schemes.

In short, Lasso proposes a new method to speed up ZK systems. This method is a new “lookup argument,” a fundamental component behind ZK-SNARKs. They estimate a roughly 10x speedup over the lookup argument in the popular Halo2 toolchain, and a 40x improvement after all optimizations are complete.

See Lasso’s research paper and Github.

JOLT stands for Just One Lookup Table.

Jolt is the team's second project. Jolt introduces a new approach to building ZK-VMs that uses Lasso. Currently, it exists only as a research paper; the team plans to release an open-source implementation later.

Jolt describes a new front-end technique that applies to a variety of instruction set architectures (ISAs). It realizes a vision, originally put forward by Barry WhiteHat, called the “lookup singularity.” The idea is to produce circuits that only perform lookups into predetermined lookup tables that are gigantic in size, more than 2^128 entries. The validity of these lookups is proved utilizing the new lookup argument Lasso. Together, these techniques avoid costs that grow linearly with the table size.
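How can anyone work with a table of 2^128 entries? The key is that such tables are highly structured and never need to be written out. As a minimal sketch of that decomposition idea (my own illustration, not Jolt's implementation, and with no proof being generated), consider 64-bit bitwise AND: the full table would need one entry per pair of 64-bit operands, i.e. 2^128 entries, yet every result can be assembled from lookups into one tiny subtable. The 4-bit chunk size is an arbitrary choice for the example.

```python
CHUNK_BITS = 4
CHUNK_MASK = (1 << CHUNK_BITS) - 1

# Small subtable: AND of every pair of 4-bit values (2^8 = 256 entries).
AND_SUBTABLE = {
    (a, b): a & b
    for a in range(1 << CHUNK_BITS)
    for b in range(1 << CHUNK_BITS)
}


def and64_via_lookups(x: int, y: int) -> int:
    """Compute a 64-bit AND where every chunk result comes from a lookup."""
    result = 0
    for i in range(64 // CHUNK_BITS):
        shift = i * CHUNK_BITS
        a = (x >> shift) & CHUNK_MASK  # i-th 4-bit chunk of x
        b = (y >> shift) & CHUNK_MASK  # i-th 4-bit chunk of y
        result |= AND_SUBTABLE[(a, b)] << shift
    return result


x, y = 0xDEADBEEFCAFEBABE, 0x0F0F0F0F0F0F0F0F
print(and64_via_lookups(x, y) == (x & y))  # True
```

Sixteen lookups into a 256-entry table stand in for one lookup into a table that could never be stored, which is the intuition behind avoiding costs that grow with the table size.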

See Jolt’s research paper "SNARKs for Virtual Machines via Lookups" and Github.

The interesting thing with Lasso and Jolt is that these open-source projects are co-written by the venture capital company Andreessen Horowitz (A16Z), together with prominent researchers in the field. The motivation for A16Z to participate in research stems from the fact that they are heavily invested in blockchain and cryptography companies that utilize ZK. Supporting ZK research can improve the performance of the ZK systems used by A16Z’s numerous portfolio companies.

Gigabrains behind Jolt and Lasso are well-known names in cryptography research: Arasu Arun, Srinath Setty, and Justin Thaler.

There is a set of 7 presentations in this YouTube list that cover the technical details of Jolt and Lasso.

Others

Beyond RISC-V, there are also other ZK-VM teams utilizing other instruction set architectures.

I decided to narrow my blog post down to the teams with a RISC-V approach. Please let me know if I missed any. You can find some of the other teams mentioned in the next chapter, where I summarize a presentation about performance comparison between ZK-VMs.

Performance comparison between ZK-VMs

Wei Dai and Terry Chung gave what is probably the most information-dense and well-prepared presentation to date on ZK-VM designs and their performance, at the ZK10 conference.

The first part of the presentation is a great overview, which I recommend watching. To understand everything in the second part, you need to be an experienced ZK engineer. They summarize lessons learned from design differences and spark discussions of finding more efficient arithmetizations and zk-specialized instruction set architectures.

Wei and Terry touched upon four categories of projects:

RISC-V related: Risc Zero, Powdr, Jolt

WASM related: zkWasm, Wasm0

EVM related: Scroll, Polygon and Zeth

ZK-specific instruction set related: Cairo, Triton, Miden and Valida.

Here is my summary of their main conclusions in the presentation. And please don’t worry if you don’t understand everything. I warmly welcome you to the imposter club! :) These conclusions mostly make sense for ZK engineers who are deep in the nuts and bolts.

On the ISA front, there are efficiency gains to be made if we eliminate local data movement.

Arithmetizations: It is clear that with more efficient SNARK-friendly hash functions, FRI can gain a lot of efficiency in terms of recursive complexity.

Minimizing the number of cells committed per instruction should be a goal. Many times there are certain cells that are carried over repeatedly across different cycles, such as registers.

There are techniques to improve lookup protocols that are applicable to all VMs. For example, a lot of Halo2 VMs still use the Halo2 lookup argument, which can be replaced by more recent and faster lookup arguments.

Finally, for PCS (Polynomial Commitment Schemes), we should have better benchmark frameworks to do comparisons.

Libraries are needed that frontends can depend on. Currently, every single STARK or SNARK library has to code its own Polynomial Commitment Schemes.

The summary slides from the presentation: STARK-VM Spec Sheet and Halo2-based VMs:

STARK-VM Spec Sheet

Halo2-based VMs

If you're not a full-time ZK engineer, I warmly suggest you just skip the slides above. In case you'd like to understand more, the video presentation runs through the data of these slides one by one. For more ZK benchmarking, you can also have a look at the ZK Bench website.

Summary and conclusion:

In this article, I explored why a multi-prover approach is needed for ZK proof generation in certain Ethereum L2 rollups. In short, there is a need for an additional proofing system besides PSE circuits that can run in parallel. This is necessary because it will take years before PSE circuits can be considered bug-free. We briefly touched upon different proving systems, such as PSE circuits, SGX, and especially delved deep into ZK-VMs.

ZK-VMs have existed since 2013, and a significant innovation occurred this year, 2023: ZK proofs were successfully generated for an entire RISC-V microprocessor architecture. RISC-V is an architecture supported by a large number of compilers and frontend languages (e.g., Rust, C++, Python, etc.). It's possible to compile nearly any program to RISC-V and generate a ZK proof of the execution trace of the program. This marks a significant paradigm shift in the industry.

In the Ethereum space, it means we can put the entire EVM inside RISC-V and have proofs generated for it effortlessly. The advantages include easier auditing and ensuring system security compared to manually written program-specific arithmetic circuits. The caveat is that currently, ZK-VM systems are 10-100x slower than program-specific proofing systems, but performance is expected to improve.

The truly fascinating future development of ZK-VMs might extend beyond the blockchain space. When ZK-VMs become more performant, there are opportunities in IVC: Incrementally Verifiable Computation, which so far has mostly been a theoretical area of cryptography research.

IVC means verifying the correct execution of computer programs at a large scale. For example, as more computation is outsourced to the cloud (which is increasingly happening), questions arise about trusting cloud computation services, especially in critical applications. IVC explores how we can generate proofs of correct execution on a massive scale for computer programs of any size. This still requires certain leaps in cryptography research and recursive proof performance, which are well on their way. The ZK-VMs of today are only a tiny glimpse of what is coming in just a few years.
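For readers who want something slightly more precise, the usual way IVC is formalized (in the spirit of the Valiant and Nova papers listed in the references; the notation below is my own shorthand, not taken from any one paper) goes roughly like this. Take a step function F, an initial state z_0, and some auxiliary input w_i for each step. The long-running computation is just F applied over and over:

$$
z_{i+1} = F(z_i, w_i), \qquad i = 0, 1, \dots, n-1
$$

At every step the prover updates a proof, using only the previous proof and the current step:

$$
\pi_{i+1} = \mathsf{Prove}(i,\; z_0,\; z_i,\; w_i,\; \pi_i)
$$

and a verifier who accepts

$$
\mathsf{Verify}(i+1,\; z_0,\; z_{i+1},\; \pi_{i+1}) = 1
$$

can be convinced (informally, and up to the usual cryptographic assumptions) that z_{i+1} really is the result of applying F to z_0 for i+1 steps. The important property is that the size of the proof and the cost of verifying it do not grow with the number of steps, which is what makes proving computations "of any size" plausible.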

What are still some open questions?

In the article, we also introduced four companies/projects in the ZK-VM space that specifically utilize the RISC-V approach: Risc Zero, Nexus, Powdr, and Jolt/Lasso.

One of the open questions is, for example, about business models and open-source licensing and how these two fit together. For instance, Risc Zero has open-sourced most of its code base (but not all). They generate revenue by offering access to their SaaS proof generation cloud service, which also provides scaling through computation parallelization.

From the point of view of decentralized blockchains, such as Ethereum rollups, these services can function as one provider of the Multi-Prover approach and bring extra security to a rollup. This is great news.

However, relying on a centralized and partly closed-source proofing system doesn’t entirely align with the values of Ethereum. While decentralization in proof systems is not nearly as important as decentralization of the blockchain’s consensus layer, it still matters. Thus, I believe there will be a demand for sufficiently decentralized and fully open-source proofing systems. That said, the dilemma with a fully open-source and decentralized approach might be finding enough capital and thus attracting talent.

Moreover, the need for computation power in the future will be so massive that an entirely new industry of "proof markets" can emerge. How do we connect idle (yet powerful) hardware with those who need ZK computation and are willing to pay for it? Certain companies are already exploring this.

Multi-proving unites both optimistic and zk-rollups

The traditional divide between Optimistic and ZK Rollups appears to be narrowing in the long term, and the multi-prover approach seems to unite them both. ZK-Rollups are exploring optimistic approaches to save computation resources. Similarly, Optimism is collaborating with two external companies to bring both RISC-V and MIPS-based ZK-VM proofing systems into play, generating additional proofs for their rollup.

How about L1, will the "Enshrined Ethereum" adopt a multi-proving approach?

"Enshrined Ethereum" refers to the long-term dream of bringing ZK proofing directly to the L1 mainnet. The ZK tech is still not nearly battle-tested enough for this to become a reality in the coming years. However, it's worth exploring how this 'endgame' would look.

Vitalik addressed this in his recent article titled "What might an 'Enshrined ZK-EVM' look like?". One clear tradeoff space is an 'open' or 'closed' approach. Hardcoding proving system choices inside the protocol would be technically easier but would go against Ethereum's open nature, regressing Ethereum from being an open multi-client system to a closed multi-client system. That being said, engineering an open system, much like it's possible for anyone to develop and use any execution client implementation, poses an additional engineering challenge.

Finally, thank you for reading the article.

I hope you enjoyed reading it as much as I enjoyed researching the topic and writing about it. It’s such a privilege to be able to research this space. Years of academic ZK research are finally entering the real world with only a relatively small number of people truly understanding their potential.

I predict that years from now, school textbooks will list the top five crypto innovations as follows: First there was Bitcoin, then Ethereum and smart contracts, followed by Zero Knowledge Proofs and ZK-VMs.

I hope you learned something new. If you did, consider sending me a message with your favorite emoticon. It’ll make my day!

- Mikko

THANK YOU

I’ve done a lot of research and reading while writing this article.

Credits especially to Vitalik Buterin, Bobbin Threadbare, Wei Dai & Terry Chung, Daniel Wang, Lisa Akselrod, Justin Thaler, Daniel Marin, Christian Reitwiessner & Leonardo Alt, Brian Retford, Diederik Loerakker (Protolambda), Koh Wei Jie and Suning Yao. And special mention to Srinath Setty and Abhiram Kothapalli who seem to be the scientists behind most of the research papers.

My article rests on the shoulders of your writings and presentations for the most part. I’ve provided the full reference list below.

References:

A rollup-centric ethereum roadmap, by Vitalik Buterin, Oct 2020

The different types of ZK-EVMs, by Vitalik Buterin, August 4, 2022

Twitter spaces panel: “Multi-proofs for rollups: A nice-to-have or a necessity?”, by Vitalik Buterin, Brecht Devos, Protolambda, Umede.eth, on December 13, 2023

What might an “enshrined ZK-EVM” look like?, by Vitalik Buterin, on December 13, 2023

Zero-knowledge Virtual Machines, the Polaris License, and Vendor Lock-in, by Koh Wei Jie, Mar 12th, 2021

What are zkVMs? And what's the difference with a zkEVM?, by David, July 2022

Proof of Meow: how ZK-VM works, by Lisa Akselrod, August 28th, 2023.

Why multi-prover matters. SGX as a possible solution, by Lisa Akselrod, September 7, 2023.

Risc Zero introduces ‘Type 0’ zkEVM to make zero-knowledge tech more accessible, by Bessie Liu, August 22, 2023.

Announcing Zeth: the first Type Zero zkEVM, Risc Zero, August 22, 2023

The Aleo Advantage: Evolving from zkEVMs to the zkVM blockchain, by Kathie Jurek, September 5, 2023

=nil; zkLLVM Circuit Compiler, Proving computations in mainstream languages with no zkVM's needed, by Nikita Kaskov and Mikhail Komarov, February 2nd 2023

Answering the call: How RISC Zero and O(1) Labs are bringing ZK proofs to the OP Stack, by Optimism, November 2nd 2023

VC Firm a16z Wades Into Crypto Tech Research with ZK Projects ‘Jolt’ and ‘Lasso’ , by Sam Kessler at Coindesk, 11 August 2023

Approaching the 'lookup singularity': Introducing Lasso and Jolt, by Justin Thaler, 8 Oct 2023

More ZK related learning resources:

Awesome Zero Knowledge - Curated list of ZK learning material

Bookmarks: relevant for ZK researchers — More ZK learning materials

zk-Bench — Comparative Evaluation and Performance Benchmarking of SNARKs

YouTube videos used in the article:

Miden VM: a STARK-friendly VM for blockchains, by Bobbin Threadbare, April 21st 2022

Nexus Labs presentation at BASS Stanford, by Daniel Marin, Aug 27th 2023

Powdr: a modular stack for zkVMs, by Christian Reitwiessner, September 20th 2023

Whitepapers and/or DOCS:

Risc Zero whitepaper, by Jeremy Bruestle, Paul Gafni & RISC Zero Team, Aug 11th 2023

Nexus — not published yet

Jolt by Arasu Arun, Srinath Setty, Justin Thaler

Lasso by Srinath Setty, Justin Thaler, Riad Wahby

Incrementally Verifiable Computation (IVC)

I compiled all the whitepapers below in this handy PDF, as mentioned in the Nexus presentation.

[Val08] Incrementally Verifiable Computation or Proofs of Knowledge Imply Time/Space Efficiency, by Paul Valiant, March 2008

[KST22] Nova: Recursive Zero-Knowledge Arguments from Folding Schemes, by Abhiram Kothapalli, Srinath Setty, Ioanna Tzialla, 30th June 2022

[KS22] SuperNova: Proving universal machine executions without universal circuits, by Abhiram Kothapalli, Srinath Setty, Dec 22nd 2022

[KS23a] Customizable constraint systems for succinct arguments, by Srinath Setty, Justin Thaler, Riad Wahby, May 3rd 2023

[NBS23] Revisiting the Nova Proof System on a Cycle of Curves, by Wilson Nguyen, Dan Boneh, Srinath Setty, June 20th 2023

[KS23] HyperNova: Recursive arguments for customizable constraint systems, by Abhiram Kothapalli, Srinath Setty, Aug 4th 2023

[BC23] Protostar: Generic Efficient Accumulation/Folding for Special-sound Protocols, by Benedikt Bünz, Binyi Chen, Aug 20th 2023